Key Takeaways

- Spot AI-generated images by checking for strange fingers, missing text, lack of texture, background oddities, fashion inconsistencies, and overall perfection.

- Verify an image’s authenticity by checking the metadata for camera info or using AI-detection software.

- Conduct a reverse image search to see if an image already exists online and consider the credibility of the source sharing the image.

Photographs have been open to manipulation since the darkroom era, but AI gives anyone the technical skills to manipulate an image or generate one from a blank canvas. The first AI-generation software produced graphics that were more laugh-worthy than realistic, but as the technology advances, determining what’s real and what’s not is no longer as easy as a quick glance. The same software that impresses inside the Pixel 9’s Reimagine and X’s Grok simultaneously creates a fear that much of the content we see may soon be more artificial than real.

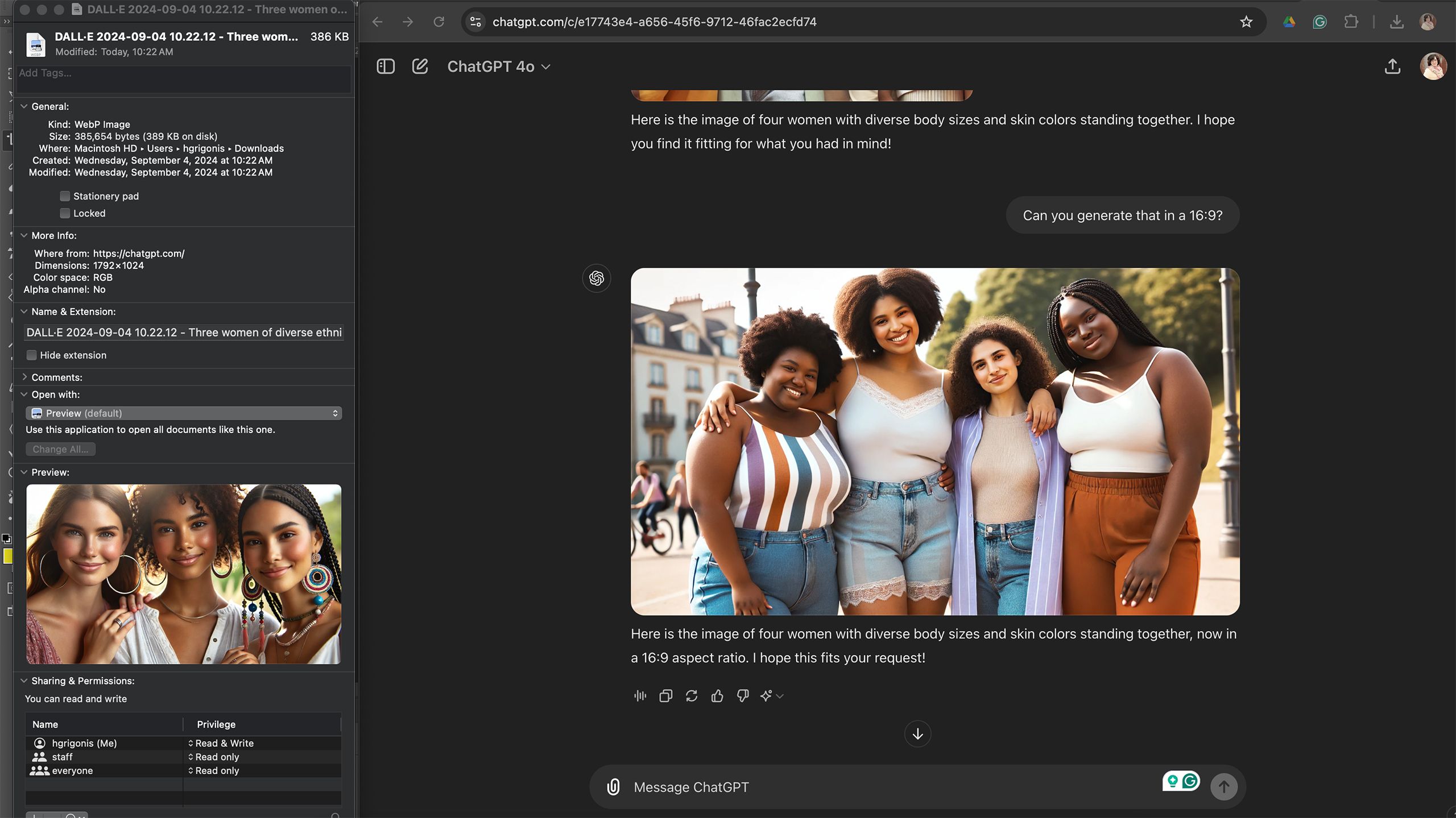

For example, take a look at these two images below. Can you tell which is AI-generated?

Grok

The answer? Both of the images above were generated by AI. As that exercise demonstrates and recent research suggests, images should be approached with the understanding that what you see may not be reality. As generative technology edges closer to the look of a real photograph, developing an eye for what’s real and what’s not is an essential skill for anyone surfing the internet. As someone who looks at AI images every day, here’s how I tell if an image is AI-generated.

1 Look for strange fingers

Can be a hands-down giveaway

Grok

As AI technology steadily improves, its generations have fewer tell-tale signs that give away the content’s origin. But while many AI platforms are improving, there are still a few signs to look for.

The lack of a common AI flaw doesn’t mean that you can say with 100 percent certainty that the image is real. For example, many AI programs seem to know that they can’t produce fingers very well and will tend to create images of people with their hands in their pockets or folded out of view.

Looking for evidence of AI in the image itself is a good first step to determining the authenticity of a photograph.


Have you ever tried to draw a pair of hands? AI struggles with hands too. AI generations will commonly have too many fingers, digits jutting out at an awkward angle, or ill-defined knuckles. If the image in question is of a person, zooming in on the hands is a good place to start.

2 Missing or illegible text

Don’t just skim words

Grok

AI has only recently gained the ability to replicate text inside an image, and it still doesn’t excel at it. For example, if I ask AI to create a happy birthday card, it will say happy birthday, but any text beyond what I asked for tends to be non-letters or wildly misspelled nonsense. Zoom in on things like graphic T-shirts, signs, or even the keys of a laptop and see if the letters are actually letters.

3 Lack of texture

If it looks like there’s a filter, it could be fake

DALL-E

Real skin has texture, and so do hair, clothing, and most of the surfaces around us. A lack of texture is a major warning sign that the image could be generated by AI. It gives the less realistic generations the feeling of being more painting than photograph, while the most realistic AI generators may require you to zoom in a little closer to notice.

A lack of texture on skin could mean Photoshop or a simple Snapchat filter, but a lack of texture on anything from clothing to a brick wall is a sign of AI.


4 Background oddities

You can even challenge AI to create messy backgrounds

DALL-E

One of my favorite prompts to use when testing a new AI is to generate an image of a messy desk. Why? The busier an image is, the more the software struggles. Look at the busier parts of the image, including the background, for things that don’t make logical sense or have a cartoonish quality.

5 Missing symmetry and fashion snafus

Little details can be a big hint

ChatGPT

AI doesn’t always understand physics or cultural standards the way a painter would, resulting in images that should be symmetrical but aren’t and fashion statements that don’t quite make sense. For example, in the image above, the two women on the right each have an earring that doesn’t quite match the one in the other ear. If you zoom in, you’ll also see that part of the middle woman’s necklace is missing. AI can also make nonsensical clothing, such as shirts that are oddly tucked, clothing with too many collars or zippers, or even a shirtless man who somehow still has sleeves.

6 An overall feeling of being too perfect

A New York City street is not that clean

ChatGPT

Generative AI is trained on a large library of images, but like the images in most magazines, many of these training images have a bias towards the modern definition of beauty. While some software is better at creating a diverse range of people than others, in general, the people generated by AI tend to look like models. An AI is more likely to generate a crowd in which everyone could be a model, whereas a real image of a crowd will show a diverse range of body types and skin tones.

7 Check the metadata

The data is in the details

Metadata is hidden information that offers details on a photograph’s origins. The metadata on a real photograph will often include details on the camera used to capture it and can even contain copyright data and the photographer’s name. Looking at this embedded information often offers additional insight on where an image comes from.

Most devices already have software capable of reading the metadata. On a Mac, right-click on a photo inside Finder and click on Get Info. On a PC, right-click and select Properties, then click on the Details tab.

Mobile devices allow you to read metadata as well. On an iPhone, tapping the “i” icon when viewing an image in Photos brings up this information. On Android, open Google Photos, select the image, tap the “…” menu, then tap Details.

If you see a camera name in the metadata, the odds increase that the image is real. AI-generated photos will often have this information blank, while the more ethical AI software will even list the program’s name inside the metadata.


Metadata isn’t foolproof, however. You can strip the metadata from a real image, for example, and an image that lists a camera in the metadata still may have been altered in Photoshop. Images uploaded to platforms like Facebook may also have their metadata stripped, even when they are real photographs. Still, it’s a good tool for gaining insight into how an image was created.
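If you’d rather check the metadata yourself than rely on a file browser, the camera and software fields can be read with a few lines of code. What follows is a minimal Python sketch, not a full metadata reader: it uses only the standard library, it handles JPEG files only, and it reads just three text fields (Make, Model, and Software) from the top-level EXIF directory. The function names `find_exif_payload` and `read_camera_tags` are made up for this example and don’t come from any library.

```python
import struct

# EXIF/TIFF tag IDs for the three text fields this sketch looks for
TAGS = {0x010F: "Make", 0x0110: "Model", 0x0131: "Software"}


def find_exif_payload(jpeg: bytes):
    """Return the TIFF data inside the first Exif APP1 segment, or None."""
    if jpeg[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        return None
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:  # not at a segment marker; give up
            return None
        marker = jpeg[pos + 1]
        if marker == 0xDA:  # start of scan: pixel data begins here
            return None
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        body = jpeg[pos + 4:pos + 2 + length]
        if marker == 0xE1 and body[:6] == b"Exif\x00\x00":
            return body[6:]
        pos += 2 + length  # skip to the next segment
    return None


def read_camera_tags(jpeg: bytes) -> dict:
    """Return any Make/Model/Software strings found in the EXIF data."""
    tiff = find_exif_payload(jpeg)
    if tiff is None or len(tiff) < 8:
        return {}
    endian = {b"II": "<", b"MM": ">"}.get(tiff[:2])  # byte-order mark
    if endian is None:
        return {}
    magic, ifd_offset = struct.unpack(endian + "HI", tiff[2:8])
    if magic != 42 or ifd_offset + 2 > len(tiff):
        return {}
    (count,) = struct.unpack(endian + "H", tiff[ifd_offset:ifd_offset + 2])
    found = {}
    for i in range(count):
        start = ifd_offset + 2 + 12 * i
        entry = tiff[start:start + 12]
        if len(entry) < 12:
            break
        tag, field_type, n, value = struct.unpack(endian + "HHI4s", entry)
        if tag in TAGS and field_type == 2:  # type 2 = ASCII string
            if n <= 4:  # short strings are stored inline
                raw = value[:n]
            else:  # longer strings live at an offset into the TIFF data
                (offset,) = struct.unpack(endian + "I", value)
                raw = tiff[offset:offset + n]
            found[TAGS[tag]] = raw.rstrip(b"\x00").decode("ascii", "replace")
    return found
```

Calling `read_camera_tags(open("photo.jpg", "rb").read())` on a file of your own returns a dictionary of whatever fields were found. An empty result only means those fields are missing, which, as noted above, also happens when a real photo has had its metadata stripped.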

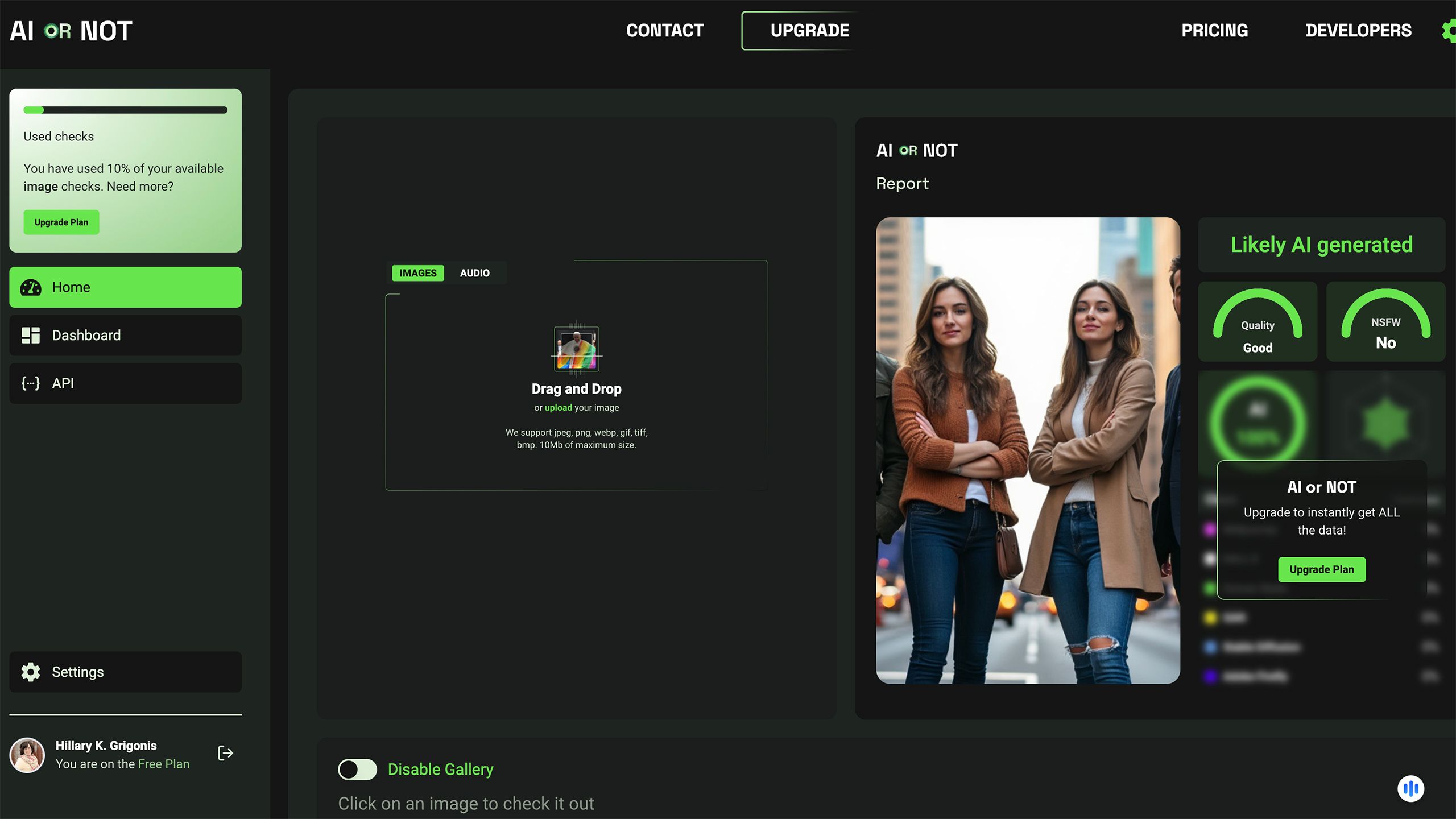

8 Use AI-detection software

Fight AI with AI

A key to the impending war on software-generated content is the ability to fight AI with AI. A number of tools use AI to determine if an image (or even written content) was generated by AI. Using AI to detect AI isn’t perfect. For example, I submitted an image that I generated in Grok to one detector and was told it was a real photograph. But the best detection options out there have a high success rate at determining whether an image is AI or not.

One of the more convenient options for AI detection is a Chrome plug-in. For example, I use the Hive AI Detector Chrome plug-in. When I want to know if an image was AI-generated, I simply left-click on Hive AI Detector, without the need to download the image.

Other programs include AI or Not and Is It AI, both available from a web browser, and mobile apps like GPT Detector and AI Detector.

9 Conduct a reverse image search

The “Locate Source” button is a game-changer

The reverse image search has long been a tool to aid in determining if an image is fake news or not. While the tool works better for spotting doctored images, its use extends to AI as well. Using the reverse image search will tell you if the image already exists on the web by searching for the source or showing similar images.

To use a Google reverse image search, open Google in a web browser, then click on the camera icon in the search bar instead of typing in text. Upload your image, then look through the results for similar images or click the “Locate Source” button.

10 Consider the source

Who — and what — is behind the image?

As with determining whether something is fake news, look at the person or organization who shared the image. Avoid trusting images from blogs and unknown publications. Check for things like misspelled account names or URLs to make sure the account isn’t trying to pass itself off as a legitimate source. And when the content really matters, such as in the case of making informed political decisions, use trusted journalism outlets that don’t fall too far on the left or right on the media bias chart.
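The misspelled-URL check can be roughly automated. The Python sketch below is only an illustration, under a few assumptions: the list of trusted domains is a hypothetical placeholder that you would replace with outlets you actually rely on, the function name `lookalike_of` is invented for this example, and the 0.8 similarity threshold is an arbitrary starting point rather than a tested value. It flags a URL whose domain is nearly, but not exactly, the same as a trusted one.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# Hypothetical placeholder list; swap in the outlets you actually trust
TRUSTED = {"examplenews.com", "dailyexample.org"}


def lookalike_of(url, trusted=TRUSTED, threshold=0.8):
    """Return the trusted domain this URL seems to imitate, or None.

    Exact matches return None because they are the real thing.
    URLs without a scheme (no "https://") have no parseable host
    and also return None.
    """
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in trusted:
        return None
    for name in sorted(trusted):
        # ratio() is 1.0 for identical strings and 0.0 for no overlap
        if SequenceMatcher(None, host, name).ratio() >= threshold:
            return name
    return None
```

For instance, `lookalike_of("https://examp1enews.com/story")` returns `"examplenews.com"` because the two names differ by a single character. A match is a reason to look closer, not proof of impersonation, and a clean result says nothing about whether the source is credible.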
